What Takes Your Team Days, AI Does in Minutes

A review that used to require a security expert, a performance expert, a senior dev, and a tech lead now happens in one automated pass.

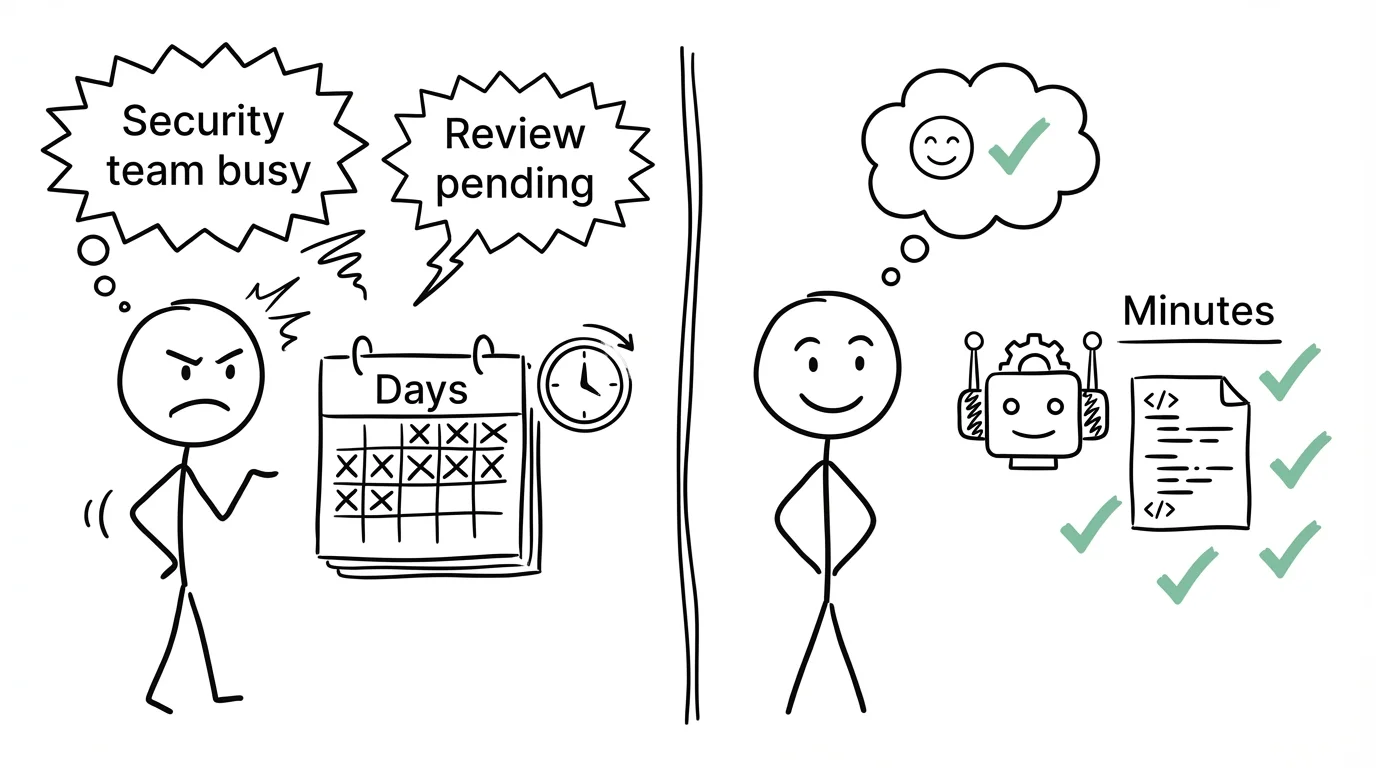

The Problem: Comprehensive Code Review Takes Days

Getting a thorough code review means waiting for multiple people:

- Your security expert checks for vulnerabilities — but she's booked until Thursday

- The performance expert finds slow code — but he's reviewing another team's work

- Your senior dev catches logic bugs — but she's in meetings all day

- The tech lead ensures code quality — but he's on vacation

The result? Your code sits waiting for 2-3 days. Or worse — you ship it without full review and bugs reach your users.

This is the hidden cost of comprehensive review:

- Scheduling hassle: Getting 4 busy experts to review one code change

- Getting up to speed: Each reviewer spends 20 minutes understanding your changes

- Waiting in line: One review has to finish before the next can start

- Inconsistent attention: Friday reviews get less focus than Monday ones

Every day blocked is progress lost. Every skipped perspective is risk shipped.

The Solution: Four AI Specialists Working in Parallel

What if you could have four expert reviewers analyze your code simultaneously — in minutes, not days?

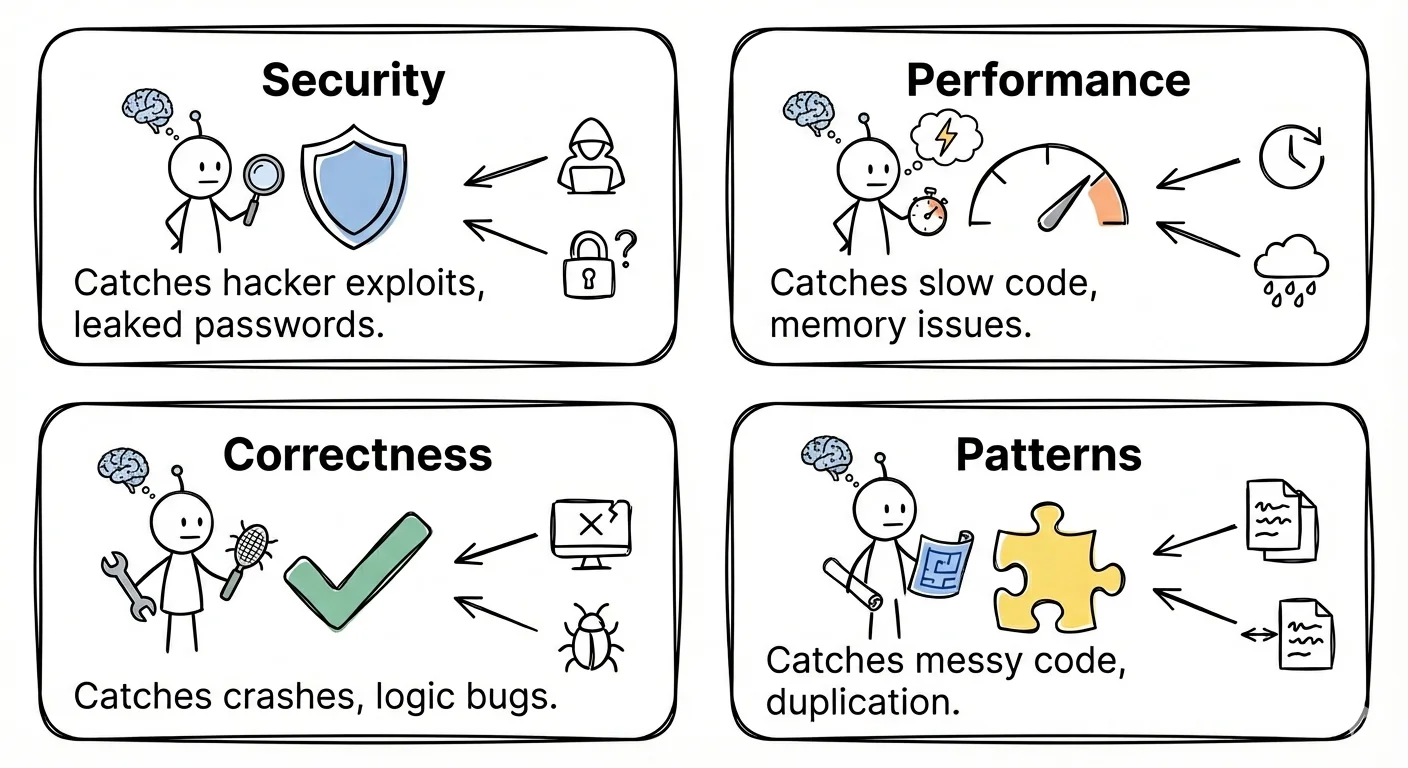

CloudThinker deploys four specialized AI agents in parallel, each focused on one domain: security, performance, logic, and code quality.

The math is simple:

- Human review: 4 experts reviewing one after another, each on their own schedule = 2-3 days of waiting

- AI review: 4 specialists running in parallel = minutes

No scheduling conflicts. No context switching. No waiting for the security team. Four deep analyses running simultaneously — in the time it takes to grab coffee.
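To make the parallel-versus-sequential point concrete, here is a minimal sketch in Python. It is an illustration only: the reviewer names and durations are hypothetical placeholders, and each "review" is simulated with a short sleep rather than any real analysis. It does not represent how CloudThinker's agents are implemented.

```python
import asyncio
import time

# Hypothetical reviewers and durations in seconds (assumed for illustration,
# not CloudThinker's actual agents or timings).
REVIEWERS = {
    "security": 0.05,
    "performance": 0.05,
    "logic": 0.05,
    "code_quality": 0.05,
}


async def review(domain: str, duration: float) -> str:
    """Stand-in for one specialist review; sleeps instead of analyzing code."""
    await asyncio.sleep(duration)
    return f"{domain}: done"


async def run_sequential() -> list:
    # Each review waits for the previous one, like reviewers waiting in line.
    return [await review(d, t) for d, t in REVIEWERS.items()]


async def run_parallel() -> list:
    # All four reviews start together; total time is roughly the slowest one.
    return await asyncio.gather(*(review(d, t) for d, t in REVIEWERS.items()))


def timed(coro_fn):
    """Run an async function to completion and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn())
    return results, time.perf_counter() - start


if __name__ == "__main__":
    _, seq = timed(run_sequential)
    _, par = timed(run_parallel)
    print(f"sequential: {seq:.2f}s, parallel: {par:.2f}s")
```

In the sequential case the total wait is the sum of all four reviews. In the parallel case it is close to the duration of the single slowest review, which is the whole argument of this section in miniature.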

The Proof: Benchmark Results

We tested 6 AI code review tools on 37 real-world bugs from production codebases:

| Tool | Detection Rate |
|---|---|
| CloudThinker | 81% |
| Greptile | 78% |
| Cursor | 54% |
| Copilot | 48% |
| CodeRabbit | 46% |
| Graphite | 8% |

How We Tested

We followed Greptile's benchmark methodology using 37 real production bugs from 4 major open-source projects: Cal.com (TypeScript), Sentry (Python), Grafana (Go), and Keycloak (Java).

Each bug was a real defect that shipped to production. We excluded trivial issues and cases where fixes were ambiguous or disputed — ensuring every test case has a clear, verified solution.

A bug counts as "caught" only when the tool points to the faulty line and explains the impact. All tools ran with default settings and full repository context.
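The "caught" rule above can be written down as a small scoring function. The sketch below is an assumption about how such a tally could be computed, not the actual benchmark harness: the `Bug` and `Finding` structures, the single `faulty_line` per bug, and the three sample bugs are all hypothetical and unrelated to the real results in the table.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bug:
    """One benchmark case (hypothetical structure)."""
    bug_id: str
    faulty_line: int


@dataclass
class Finding:
    """One comment a tool left on a benchmark case (hypothetical structure)."""
    bug_id: str
    flagged_line: Optional[int]  # line the tool pointed to, if any
    explains_impact: bool        # did the comment explain the impact?


def is_caught(bug: Bug, findings: List[Finding]) -> bool:
    # Caught only if some finding points to the faulty line AND explains the impact.
    return any(
        f.bug_id == bug.bug_id
        and f.flagged_line == bug.faulty_line
        and f.explains_impact
        for f in findings
    )


def detection_rate(bugs: List[Bug], findings: List[Finding]) -> float:
    if not bugs:
        raise ValueError("need at least one bug to compute a rate")
    caught = sum(is_caught(b, findings) for b in bugs)
    return caught / len(bugs)


# Made-up sample data, for illustration only.
bugs = [Bug("A", 10), Bug("B", 42), Bug("C", 7)]
findings = [
    Finding("A", 10, True),   # right line, impact explained: caught
    Finding("B", 42, False),  # right line, no impact explained: not caught
    Finding("C", 99, True),   # wrong line: does not count
    Finding("C", 7, True),    # right line, impact explained: caught
]

print(f"{detection_rate(bugs, findings):.0%}")  # prints 67%
```

Requiring both conditions is the strict part of the rule: a tool that flags the right line without explaining why it matters, or describes a problem while pointing somewhere else, gets no credit.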

Works With Your Workflow

- GitLab & GitHub — connects in minutes

- Automatic review on every code change

- Comments directly on your code with priority levels

No new tools. No workflow changes. Just better reviews.

Stop Waiting. Start Shipping.

Your team shouldn't spend days coordinating reviews. Your code changes shouldn't sit blocked while experts are in meetings. Your users shouldn't be the ones who find the bugs.

Four perspectives. Minutes, not days. Every code change.

What used to require a security engineer, performance specialist, senior dev, and tech lead — now happens automatically, in parallel, before your coffee gets cold.